Emily Lakdawalla • Oct 13, 2009

That psychedelic M-cubed Moon movie explained

Advance warning: this entry may be a little technical for some. I hope that the following makes some sense! When I posted my writeup of the Chandrayaan-1 Moon Mineralogy Mapper (M3) results detailing the discovery of widespread water/hydroxyl in near-surface lunar rocks, I included this really cool-looking video. It was described as a random walk through the M3 data but there wasn't any more description of what it represented. (Check it out on Facebook for HD resolution.)

Thanks to the video's creator, Joe Boardman of Analytical Imaging and Geophysics ("the other AIG"), I can now tell you a little bit more about what the video represents. First I'll give Joe's explanation, which he sent to the lunar listserv, then I'll try to explain what he's talking about.

"These are from the very early Optical Period 1 data, so it's partial near side coverage. I took the data and did a rough calibration and then did a standard Principal Components on the 86 bands of Global Mode data. Happily we see at least 12 coherent spectral dimensions in the data, even at that early stage of calibration.

"I then created an animation that is meant to start you off with the moon you know, true color (or lack of it). Then it sweeps you through (in 933 animation frames) what we call a Grand Tour of the first 12 PC images of the data. Imagine the RGB 3-d frame of the color composite is a 'window' through which you are looking into the 12-d space. Then imagine turning that window to progressive random look directions in 12-space, all the while following a minimum-spin smooth path called a geodesic.

"It ends by taking you back to true color (or lack of it) and hopefully wanting more of the 'colorful Moon'. With apologies to MGM and the Wizard of Oz."

Phew. OK, what does that mean?

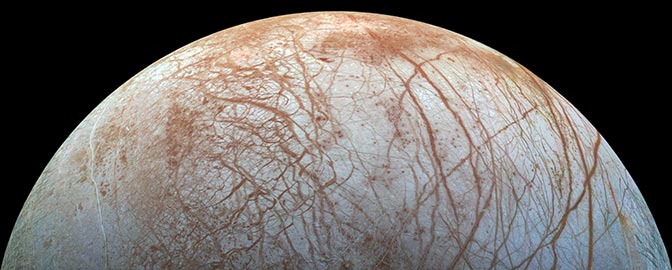

To begin with, M3 is an imaging spectrometer. It takes images of the Moon, like a camera. But each pixel in its image contains a wealth of spectral information. For M3, each pixel includes data on how that spot on the Moon reflects light at 86 different slices of the electromagnetic spectrum. I explained this in a bit more detail in my original story on the detection of water on the Moon.

It can be really hard to get a handle on a hyperspectral data set like M3's. You can pick one wavelength and look at how each point in the image reflects light at that wavelength. That gives you a basic black-and-white image of the Moon. You can pick three wavelengths and use those three grayscale images to make a single RGB color image of the Moon, but that still doesn't make use of 83 of the 86 bands available in the M3 data; what's worse, there are more than 100,000 unique 3-member combinations that you could make from that 86-band data, any one of which only gives you a view into 3 of the data's dimensions.
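To check that "more than 100,000" figure, here is a quick sketch of the count. The function name `count_band_triples` is just an illustrative choice; the only assumption is that the order of the three bands doesn't matter (if you count which band goes to red, green, and blue separately, multiply by 6).

```python
from math import comb

def count_band_triples(n_bands: int) -> int:
    """Number of unordered ways to pick 3 bands out of n_bands."""
    return comb(n_bands, 3)

print(count_band_triples(86))  # 102340
```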

How better to handle 86-dimensional data? Why, you graph it in 86-space, of course. I won't pretend I can imagine 86-space. But a computer has little more difficulty with 86-space than it does with 3-space. You can treat each pixel of the M3 data like an ordered pair on the plane or ordered triple in 3-space -- except it's an ordered 86-tuple, in 86-space.
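In code, "treating each pixel as a point in 86-space" usually amounts to flattening the image cube into a table with one row per pixel and one column per band. The sketch below uses NumPy and a small random array as a stand-in; the array sizes and the name `cube_to_pixels` are assumptions for illustration, not anything from the M3 pipeline.

```python
import numpy as np

def cube_to_pixels(cube: np.ndarray) -> np.ndarray:
    """Flatten a (rows, cols, bands) cube into (rows * cols, bands) pixel vectors."""
    rows, cols, bands = cube.shape
    return cube.reshape(rows * cols, bands)

rng = np.random.default_rng(0)
cube = rng.random((4, 5, 86))   # synthetic stand-in, NOT real M3 data
pixels = cube_to_pixels(cube)

print(pixels.shape)  # (20, 86): 20 points, each an 86-tuple
```

Each row of `pixels` is one spot on the Moon, written as its 86 reflectance values.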

That's probably a little mind-bending. How can this possibly help us? Well, the distribution of points is not going to be random. There's likely to be some clustering of points in that 86-space. For instance, the lunar maria are darker in most wavelengths than the lunar highlands. So you'd expect to see two big clusters of points in that 86-space, a big pile down at the lower end of the reflectance scale and another big pile at the upper end of the reflectance scale, in just about every dimension in 86-space. If you could see in 86 dimensions, you'd probably notice other clusters too. Those clusters would represent pixels that have somewhat similar characteristics across all 86 dimensions of the M3 data.

But how can we "see" that clustering? Most humans can manage to comprehend three spatial dimensions at most. What statisticians do when confronted with a data set like this is an operation called principal components analysis, or PCA. Broadly, PCA is a way to extract information on the variability contained within a data set. Imagine that the data is a cloud of points and remember how I said there was probably a cluster at the low end of the scale and a cluster at the high end of the scale? Plot a best-fit line through all those points, drop perpendiculars from every one of the points to that best-fit line to see where each point falls along that axis, and you've got your first principal component, which will be a measure of how "mare-like" or "highlands-like" the point is.

You have, in effect, transformed the data set to a new coordinate system. But that first principal component doesn't account for all of the variability in the data set. So now find a new vector in 86-space, perpendicular to the first one, that accounts for as much of the remaining variability as possible, and that's your second principal component. According to what Boardman said above, there are 12 orthogonal vectors to be found in the 86-space plot of the M3 data that encode coherent (non-random) information on the variability within the image. Another way of looking at it is that we've reduced the 86-dimensional data down to 12 dimensions that still contain all the useful information about the variability within the data set, which is a big improvement.
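For the curious, here is a minimal sketch of that procedure using NumPy's singular value decomposition, which is one common way to compute principal components. It is not Boardman's actual processing; the data are two synthetic clusters ("dark" and "bright") made up to mimic the mare/highlands split, and the names `pca`, `dark`, and `bright` are illustrative assumptions.

```python
import numpy as np

def pca(pixels: np.ndarray, n_components: int):
    """Return (scores, components, variances) for data with one pixel per row."""
    centered = pixels - pixels.mean(axis=0)
    # Rows of vt are orthonormal directions, ordered by decreasing variance.
    _, s, vt = np.linalg.svd(centered, full_matrices=False)
    components = vt[:n_components]
    # Projecting onto each direction is the "drop perpendiculars" step.
    scores = centered @ components.T
    variances = (s ** 2) / (len(pixels) - 1)
    return scores, components, variances[:n_components]

# Synthetic stand-in: two clusters in 86-space, NOT real M3 data.
rng = np.random.default_rng(0)
dark = 0.1 + 0.01 * rng.standard_normal((500, 86))
bright = 0.3 + 0.01 * rng.standard_normal((500, 86))
pixels = np.vstack([dark, bright])

scores, components, variances = pca(pixels, n_components=12)
print(scores.shape)  # (1000, 12)
```

In this toy case the first component soaks up nearly all the variance, because overall brightness is the only real difference between the two clusters; the remaining components are just noise. Real data with at least 12 coherent dimensions would spread meaningful structure across more components.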

Each of the 12 principal components can be represented as an image of the Moon, showing how each pixel within the M3 image of the Moon plots along that principal component axis. Take any three of those principal components images and make an RGB combination out of it, and now you are really exploring the variability in the data set. Twelve variables is still a lot; there are 220 unique 3-component combinations you can make of those twelve principal components. But 220 is a lot less than a hundred thousand, which is the number of combinations we were looking at to begin with.
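Here is a rough sketch of how one such RGB combination could be assembled, and a check on the count of 220. The percentile stretch is an assumption on my part (a common way to map arbitrary values onto a displayable 0 to 1 range), and `pc_images` is a random placeholder rather than real principal component images.

```python
from itertools import combinations
import numpy as np

def stretch(band: np.ndarray, low: float = 2, high: float = 98) -> np.ndarray:
    """Rescale one image to 0..1, clipping at the given percentiles."""
    lo, hi = np.percentile(band, [low, high])
    if hi == lo:
        return np.zeros_like(band, dtype=float)
    return np.clip((band - lo) / (hi - lo), 0.0, 1.0)

def pc_composite(pc_images: np.ndarray, triple) -> np.ndarray:
    """Build a (rows, cols, 3) RGB image from three principal component images."""
    return np.dstack([stretch(pc_images[:, :, i]) for i in triple])

rng = np.random.default_rng(0)
pc_images = rng.standard_normal((10, 12, 12))  # (rows, cols, 12 PCs), synthetic

triples = list(combinations(range(12), 3))
print(len(triples))  # 220

rgb = pc_composite(pc_images, triples[0])  # PCs 0, 1, 2 as red, green, blue
print(rgb.shape)  # (10, 12, 3)
```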

The video above takes a random walk through many 3-component combinations of the 12 principal components images, giving a much better view of the variability within the M3 data set than you'd get if you just treated M3 like a camera. It makes no assumptions about why the variability is there within the data, Boardman told me: "It does not discriminate but equally shows the mineral signatures, the surface properties we are sensitive to, the thermal and photometric effects, and the water."
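To give a flavor of the "turning window" idea, here is a simplified sketch of stepping a 3-dimensional viewing frame between two random orientations in 12-space. To be clear about the assumptions: this blends the two frames linearly and re-orthonormalizes with a QR decomposition at each step, which is a stand-in and not the geodesic path Boardman describes; the function names and the step count are made up for illustration.

```python
import numpy as np

def random_frame(rng, n_dims: int = 12, n_view: int = 3) -> np.ndarray:
    """A random (n_dims, n_view) matrix with orthonormal columns."""
    q, _ = np.linalg.qr(rng.standard_normal((n_dims, n_view)))
    return q

def tour_frames(start: np.ndarray, end: np.ndarray, n_steps: int):
    """Yield orthonormal frames that move smoothly from start to end."""
    for t in np.linspace(0.0, 1.0, n_steps):
        blended = (1.0 - t) * start + t * end
        q, r = np.linalg.qr(blended)
        # QR is only unique up to column signs; keep each column's orientation.
        yield q * np.sign(np.diag(r))

rng = np.random.default_rng(0)
start, end = random_frame(rng), random_frame(rng)
frames = list(tour_frames(start, end, n_steps=30))

# Each frame turns 12 PC scores per pixel into 3 display channels.
scores = rng.standard_normal((1000, 12))  # synthetic PC scores
view = scores @ frames[15]
print(view.shape)  # (1000, 3)
```

Chaining many such segments, each ending at a fresh random frame, gives a wandering tour of the 12-dimensional space; each `view` would then be stretched and displayed as one RGB animation frame.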
