Heather Hunter • May 12, 2017

Radar in Earth and Planetary Science, Part 2

In part one of our introduction to radar in Earth and planetary science, we briefly discussed some basics. We learned that radar stands for “radio detection and ranging,” and that it is a sensor that generates its own electromagnetic energy, usually in the microwave portion of the electromagnetic spectrum. We also learned that radar is generally impervious to weather, so if your target is behind clouds or rain, you’ll still be able to “see” your target.

But what if we could improve our radar to take interpretable images? What if, instead of seeing only brief echoes of the radar energy reflecting off a given scene, we could create high resolution images of the reflected energy?

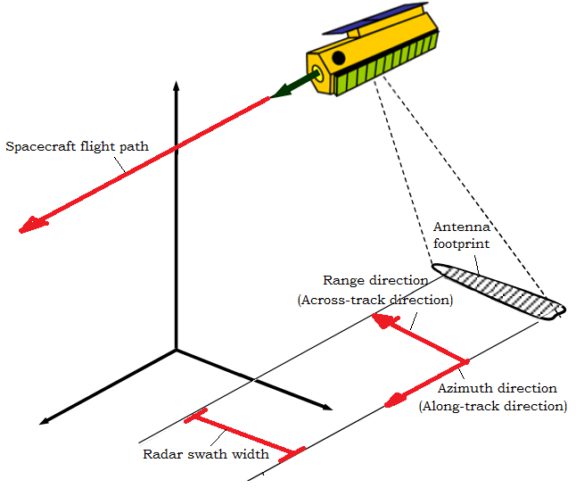

Let’s begin our discussion by imagining an aircraft carrying a radar. Images taken by radars on aircraft or satellites are acquired in long “strips” as the platform moves along its flight path, or track. Unlike optical sensors, which usually look straight down, an imaging radar is typically pointed off to the side of the flight track, so that its energy is transmitted obliquely. The “footprint” of the energy emanating from within the radar antenna’s “beam” has dimensions in a “range” direction and an “azimuth” direction. The term “range” refers to the dimension crossing the flight track. Alternatively, you can think of it as the dimension coming straight out from the antenna’s beam, but projected onto the ground. The term “azimuth” refers to the dimension along the flight track, projected onto the ground. These terms will be key to our subsequent discussion.
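To make the range geometry a little more concrete, here is a minimal Python sketch under a flat-Earth assumption (no Earth curvature, no terrain): the radar’s altitude and its line-of-sight (“slant”) distance to a target form a right triangle, so the distance projected onto the ground follows from Pythagoras. The function names and example numbers are hypothetical, chosen only for illustration.

```python
import math

def ground_range_m(slant_range_m, altitude_m):
    """Project a line-of-sight (slant) distance onto flat ground.

    Flat-Earth approximation: the radar, the point directly below it, and the
    target form a right triangle with the slant range as the hypotenuse.
    """
    if slant_range_m < altitude_m:
        raise ValueError("slant range cannot be shorter than the altitude")
    return math.sqrt(slant_range_m ** 2 - altitude_m ** 2)

def look_angle_deg(slant_range_m, altitude_m):
    """Angle between straight down and the radar's line of sight to the target."""
    return math.degrees(math.acos(altitude_m / slant_range_m))

# Hypothetical airborne case: 10 km altitude, 15 km line-of-sight distance
print(round(ground_range_m(15_000, 10_000)))       # 11180
print(round(look_angle_deg(15_000, 10_000), 1))    # 48.2
```

Real processors account for Earth’s curvature and terrain height, which this sketch deliberately ignores.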

In remote sensing, the basic measure of the spatial quality of an image is its “resolution.” The term “spatial resolution” refers to the ability of our radar (or any sensor) to differentiate between objects. It is a measure of the finest detail our radar can see and is typically expressed in meters. There’s a measure of resolution for each dimension of the radar image: “azimuth resolution” and “range resolution,” which describe how well the radar can resolve objects in each direction.

Synthetic aperture radar (SAR) exists because conventional radar suffers from poor azimuth resolution. In other words, conventional radar is not very good at distinguishing objects along the aircraft’s flight direction. The azimuth resolution of a conventional radar depends on the physical dimensions of its antenna; in fact, the two are inversely proportional. This means that, as the radar’s antenna grows longer, the resolution improves. The resolution also depends on other variables, such as the radar’s transmitting wavelength and the distance to the target or scene in question.

How do we make the azimuth resolution better? Based on the relationship I’ve just described, we can guess a few ways this can be done: 1) increase the along-track antenna length, 2) decrease the radar’s transmitting wavelength, or 3) get the aircraft closer to the target.

It’s clear that the last option is not viable, especially for a SAR on a spacecraft. The second option is also limited: shorter wavelengths are absorbed and scattered more strongly by clouds and rain, so shrinking the wavelength erodes the all-weather advantage that makes radar attractive in the first place.
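The relationship behind these options is commonly approximated as azimuth resolution ≈ λR/L, where λ is the wavelength, R is the distance to the scene, and L is the along-track antenna length. A minimal Python sketch of that approximation, using assumed, roughly spaceborne C-band values (not taken from any specific mission), shows how each lever changes the result:

```python
def real_aperture_azimuth_resolution(wavelength_m, range_m, antenna_length_m):
    """Approximate along-track resolution of a conventional (real-aperture) radar.

    The beam's angular width is roughly wavelength / antenna_length, so the
    strip of ground it smears together grows in proportion to the range.
    """
    return wavelength_m * range_m / antenna_length_m

# Assumed values: 5.6 cm wavelength, 800 km range, 10 m antenna
baseline = real_aperture_azimuth_resolution(0.056, 800e3, 10.0)
print(f"baseline:            {baseline:.0f} m")   # 4480 m

# Apply each lever on its own
print(f"2x longer antenna:   {real_aperture_azimuth_resolution(0.056, 800e3, 20.0):.0f} m")
print(f"half the wavelength: {real_aperture_azimuth_resolution(0.028, 800e3, 10.0):.0f} m")
print(f"half the range:      {real_aperture_azimuth_resolution(0.056, 400e3, 10.0):.0f} m")
```

Each lever halves the resolution cell here, but even so a conventional radar in orbit only resolves features kilometers apart along track.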

That leaves us with the first option, and it’s the key behind SAR. But adding an antenna that might be 25 to 100 meters long to an aircraft or spacecraft is generally impractical, so what do we do? Instead, we use a conventional antenna, perhaps just a few meters long, and use computer processing to simulate a much longer one.
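Why does processing echoes along the flight path act like a long antenna? A small antenna of length D has a wide beam, so any given target stays illuminated while the platform travels roughly λR/D; that stretch of flight path is the “synthetic aperture.” Under idealized stripmap assumptions (straight flight, a single look, no trade-offs against swath width), a standard result is that the achievable azimuth resolution is about D/2, independent of range and wavelength. The sketch below reuses the same assumed values as a conventional-radar example; the function names are hypothetical.

```python
def synthetic_aperture_length(wavelength_m, range_m, antenna_length_m):
    """Along-track distance over which one target stays inside the real beam."""
    return wavelength_m * range_m / antenna_length_m

def sar_azimuth_resolution(wavelength_m, range_m, antenna_length_m):
    """Idealized stripmap SAR azimuth resolution.

    The factor of 2 comes from the two-way (transmit and receive) path over the
    synthetic aperture; wavelength and range cancel, leaving antenna_length / 2.
    """
    l_syn = synthetic_aperture_length(wavelength_m, range_m, antenna_length_m)
    return wavelength_m * range_m / (2 * l_syn)

# Assumed values: 5.6 cm wavelength, 800 km range, 10 m real antenna
print(f"synthetic aperture: {synthetic_aperture_length(0.056, 800e3, 10.0) / 1000:.2f} km")  # 4.48 km
print(f"SAR azimuth resolution: {sar_azimuth_resolution(0.056, 800e3, 10.0):.1f} m")       # 5.0 m
```

Operational systems usually land somewhat coarser than this ideal, because they trade resolution for wider coverage and average multiple looks to reduce noise.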

How is a single SAR antenna able to emulate a very large antenna? The basic concept is as follows: the SAR transmits a signal, receives the echo of that signal from a target while the SAR is moving, and maps that received signal to a specific position. To map the positions of whatever the received signals have bounced off (the targets), SAR records each echo’s “phase,” that is, where the returning wave is in its cycle, and exploits the motion of its platform. As the radar flies past a target, the distance to that target first shrinks and then grows, so the echo’s phase changes in a pattern that depends on where the target sits along the track. Without this relative motion between the SAR and the target or scene, the SAR would not be able to locate the target(s) along track.
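A minimal Python sketch of this “phase history,” assuming straight, level flight past point targets and unwrapped phase (not reduced modulo 2π): the two-way phase is −4πR/λ, where R is the one-way distance. The geometry and wavelength are assumed values, and the function name is hypothetical.

```python
import math

def two_way_phase(platform_x_m, target_x_m, closest_range_m, wavelength_m):
    """Unwrapped phase (radians) of an echo from a point target.

    The wave travels to the target and back, so the phase advances 2*pi for
    every half-wavelength of one-way distance: phi = -4*pi*R / wavelength.
    """
    one_way_range = math.hypot(platform_x_m - target_x_m, closest_range_m)
    return -4 * math.pi * one_way_range / wavelength_m

R0 = 10_000.0       # assumed closest-approach distance (m)
WAVELENGTH = 0.03   # assumed wavelength (m)

# Two targets at different along-track positions leave different phase patterns
for target_x in (0.0, 50.0):
    reference = two_way_phase(target_x, target_x, R0, WAVELENGTH)
    history = [two_way_phase(x, target_x, R0, WAVELENGTH) - reference
               for x in (-100.0, 0.0, 100.0)]
    print(f"target at x = {target_x:5.1f} m:", [round(p, 1) for p in history])
```

Each target’s pattern is centered on its own position, which is what lets the processor tell along-track positions apart. The rate at which this phase changes is the Doppler shift of the echo.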

Once the SAR has obtained many (usually thousands of) echo signals and mapped them to specific positions, it combines all signals corresponding to each position. With some sophisticated signal processing, the SAR focuses the signals at each position, producing a synthesized image with much finer spatial resolution than that of its conventional radar counterpart. Better yet, this process generates an image using a modestly sized, conventional antenna, meaning that we don’t need a huge antenna that might not even fit on an aircraft or satellite.
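One simple way to illustrate this combining step is a matched filter (close in spirit to time-domain back-projection): for each candidate position, remove the phase a target there would have produced, then add up all the echoes. The toy Python sketch below works in the along-track dimension only and assumes a single noise-free point target, straight flight, and an antenna that illuminates the target over the whole aperture. It is not how any particular mission processes its data; real processors also handle range, antenna patterns, and motion errors, and use much faster algorithms. All names and values are hypothetical.

```python
import cmath
import math

WAVELENGTH_M = 0.03         # assumed wavelength
CLOSEST_RANGE_M = 10_000.0  # assumed distance at closest approach

def echo_phase(platform_x, target_x):
    """Two-way phase of an echo from a point target (straight, level flight)."""
    r = math.hypot(platform_x - target_x, CLOSEST_RANGE_M)
    return -4 * math.pi * r / WAVELENGTH_M

def simulate_echoes(platform_xs, target_x):
    """Unit-amplitude, noise-free echoes from a single point target."""
    return [cmath.exp(1j * echo_phase(px, target_x)) for px in platform_xs]

def focus(echoes, platform_xs, candidate_x):
    """Brightness of one along-track pixel.

    Remove the phase a target at candidate_x would have produced, then add the
    echoes coherently. At the true position every term lines up and the
    normalized sum is 1; elsewhere the terms point in different directions
    and largely cancel.
    """
    total = sum(e * cmath.exp(-1j * echo_phase(px, candidate_x))
                for e, px in zip(echoes, platform_xs))
    return abs(total) / len(echoes)

# 601 echoes collected every 0.5 m over a 300 m stretch of flight path
platform_xs = [-150.0 + 0.5 * i for i in range(601)]
echoes = simulate_echoes(platform_xs, target_x=12.0)

# Form a 1-D "image" on a 1 m grid of candidate positions
candidates = [float(c) for c in range(-30, 31)]
image = [focus(echoes, platform_xs, c) for c in candidates]
best = candidates[image.index(max(image))]
print(f"brightest pixel at x = {best} m")   # brightest pixel at x = 12.0 m
```

Summing coherently, rather than just adding echo strengths, is what concentrates the energy from many weak, smeared echoes into one sharp pixel.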

It turns out that SAR is extremely useful in both Earth and planetary sciences, for more reasons than just its ability to see through clouds, fog, and bad weather. Since it makes careful calculations of the relative locations of objects in a scene, it can be used to determine how things have changed over time. For example, SAR imagery can help us monitor glacier movement, landmass movement after earthquakes, movement of man-made objects, flood extent, and even the motion of ocean waves and ocean currents (though the latter is a complex discussion best saved for another day).
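One widely used way to measure ground movement between passes is SAR interferometry (InSAR), which compares the phase of two images acquired from nearly the same position at different times. Because the signal travels out and back, a full 2π cycle of phase change (one “fringe”) corresponds to half a wavelength of motion along the radar’s line of sight. A minimal Python sketch of that conversion, assuming the phase change has already been extracted and unwrapped and ignoring topography and atmospheric delays; the function name and wavelength are illustrative assumptions:

```python
import math

def los_displacement_m(phase_change_rad, wavelength_m):
    """Change in radar-to-ground distance implied by an interferometric phase change.

    The signal travels out and back, so a 2*pi phase change corresponds to a
    change of wavelength / 2 in the one-way distance. Whether a positive value
    means motion toward or away from the radar depends on the processor's
    sign convention.
    """
    return wavelength_m * phase_change_rad / (4 * math.pi)

# Assumed C-band wavelength of about 5.55 cm, roughly that of Sentinel-1
one_fringe = los_displacement_m(2 * math.pi, 0.0555)
print(f"one full fringe = {one_fringe * 100:.1f} cm of line-of-sight motion")  # 2.8 cm
```

This half-wavelength sensitivity is why interferometric SAR can pick up centimeter-scale ground motion after an earthquake.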

SAR is also used to make high-fidelity digital elevation models (topographic maps), it helps us see and track oil spills, and it can even measure soil moisture. Unlike optical images, SAR gives us information on both the intensity and the phase of the signals it receives. The behavior of the signals transmitted by SAR depends largely on the structure and dielectric properties of the intended target (that is, how well it conducts electricity or interacts with an electric field, like a SAR signal). As a result, we can glean a wealth of information invisible to the human eye, which gives SAR an edge over conventional sensors that rely on the visible part of the electromagnetic spectrum.

Now that I’ve sung the praises of SAR, how are some of the world’s leading space agencies making use of it?

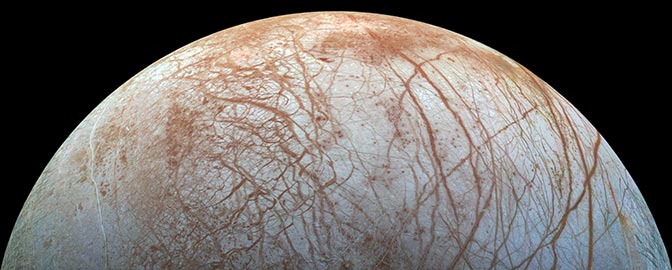

Owing to its ability to peer through clouds, the SAR on the Magellan probe had no problem mapping the Venusian surface. It is a classic early example of SAR’s advantage over optical systems when it comes to thick atmospheres. Similarly, the SAR aboard the Cassini probe gave scientists unprecedented views of Titan’s surface. Recall that Titan, like Venus, is shrouded in clouds and haze, and SAR is the perfect fit for a cloudy atmosphere!

When it comes to Earth, the European Space Agency (ESA) leads the way in the number of operational SAR instruments in its Earth-observing fleet. The most recent of these belong to the Sentinel family, a collection of satellites that monitor the entire globe and provide data on everything from the state of Arctic sea ice to oil spills, along with other environmental information used to develop the maps needed during crisis situations and humanitarian aid operations. Sentinel-1A was launched in 2014 and Sentinel-1B in 2016; the rest of the Sentinels (2–6) are either not fitted with SAR or have not yet been launched.

Now you know the very basics of SAR, and how scientists are using it to study both the Earth and other bodies in the solar system. If you’re interested in learning more technical details, or you want to learn more about the applications of SAR, here are a handful of online resources to help you get started:
